It will probably throw other errors that I can't envisage. It will almost certainly throw errors if you select cells without hyperlinks, or select multiple columns. Set value of cell nuCellAddress to trimmedHyperlink2 -place trimmed URL in cell to right Set nuCellAddress to nuColumnName & nuCellRow Tell activeTable -find address of cell to right of original cell Set trimmedHyperlink2 to text item 1 of trimmedHyperlink1 - chop off the back, result is the clean URL Set AppleScript's text item delimiters to "\"," - first characters after clean URL Set trimmedHyperlink1 to text item 2 of theFormula - chop off the front chunk Set AppleScript's text item delimiters to "=HYPERLINK(\"" - front chunk Tell application "Numbers" to set theFormula to formula of eachCell - get the formula as a string, parse the string to extract the URL Set nuColumn to (add column after item 1 of theColumn) - create a new column in which to place the URLS Set theColumn to column of selection range Set theCells to cells of selection range - get a list of the selected cells

Tell activeTable - get information from the active table Tell document 1 to tell active sheet to set activeTable to first table whose selection range's class is range - define the working area Set AppleScript's text item delimiters to "" - clear existing tids Here's a column of hyperlinks, first formula displayed: I suspect there are slightly less convoluted ways of doing this, but there's currently a bug in the AppleScript implementation of 'selection range' which makes things a bit more cumbersome, and it's late on a Saturday night. If you do this often you can consider putting the script in the script menu.

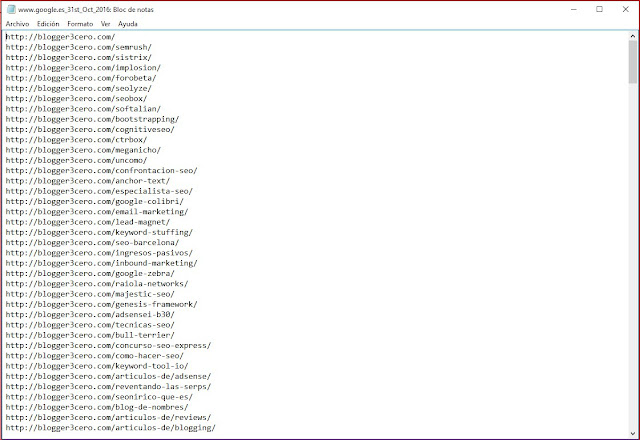

#Extract all links from page software#

Here's an updated version of my "short" script that got mangled by the forum software in the older thread. I scrape websites using Bash exclusively to verify the HTTP status of client links and report back to them on errors found. I've found awk and sed to be the fastest and easiest to understand.

    curl -Lk | sed -r 's~(href="|src=")([^"]+).*~\n\1\2~g' | awk '/^(href|src)/,//'

Because sed works on a single line, this will ensure that all URLs are formatted properly on a new line, including any relative URLs. The first sed finds all href and src attributes and puts each on a new line while simultaneously removing the rest of the line, including the closing double quote (") at the end of the link.

Notice I'm using a tilde (~) in sed as the separator for the substitution. This is preferred over a forward slash (/), which can confuse the sed substitution when working with HTML. The awk finds any line that begins with href or src and outputs it.

Once the content is properly formatted, awk or sed can be used to collect any subset of these links. For example, you may not want base64 images, instead you want all the other images. Once the subset is extracted, just remove the href=" or src=":

    sed -r 's~(href="|src=")~~g'

This method is extremely fast and I use these in Bash functions to format the results across thousands of scraped pages for clients that want someone to review their entire site in one scrape.
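As a rough illustration, the sed and awk steps described here can be wrapped in a pair of small shell functions. This is only a sketch under a few assumptions: it expects GNU sed (for `\n` in the replacement), it reads HTML from standard input instead of calling curl, and the function names `extract_links` and `strip_attr` and the sample HTML are made up for the example. Because the substitution keeps only the first match on a line, the sketch also splits the input on `>` first so that each tag sits on its own line; that step is an addition, not part of the original command.

```shell
#!/usr/bin/env bash
# Sketch only: assumes GNU sed, which turns "\n" in the replacement into a newline.

# extract_links (hypothetical name): read HTML on stdin and print one
# href="..." or src="..." entry per line, without the closing quote.
extract_links() {
  tr '>' '\n' |                                       # one tag per line (added step)
    sed -E 's~(href="|src=")([^"]+).*~\n\1\2~g' |     # move the attribute to its own line
    awk '/^(href|src)="/'                             # keep only the attribute lines
}

# strip_attr (hypothetical name): drop the leading href=" or src=".
strip_attr() {
  sed -E 's~^(href="|src=")~~'
}

# Made-up sample page with two links per line and one base64 image.
html='<a href="/about">About</a> <img src="logo.png">
<img src="data:image/png;base64,AAAA"> <a href="https://example.com/x">X</a>'

# Every link, in document order.
all_links=$(printf '%s\n' "$html" | extract_links | strip_attr)

# Subset: src links only, skipping data: URIs such as base64 images.
images=$(printf '%s\n' "$html" | extract_links |
  awk '/^src="/ && !/^src="data:/' | strip_attr)

printf '%s\n' "$all_links"
```

To use it on a live page, the output of curl could be piped into `extract_links` in place of the sample string. Filtering before stripping the prefix keeps the `href`/`src` distinction available for as long as it is needed.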
